Leaders method for TQs

The leaders method for TQs adapts the leaders method for modal symbolic data to temporal quantities (TQs).

Slides from Sunbelt 2020.

Clustering a large set using the leaders method

To cluster all 13332 words (nodes) in Terror news I used an adapted leaders method searching for 100 clusters:

wdir = "C:/Users/batagelj/work/Python/graph/Nets/clusTQ"

gdir = 'c:/users/batagelj/work/python/graph/Nets'

import sys, os, re, datetime, json

sys.path = [gdir]+sys.path; os.chdir(wdir)

from TQ import *

from Nets import Network as N

from numpy import random

import numpy as np

from copy import copy, deepcopy

import collections

def table(arr): return collections.Counter(arr)

def timer(): return datetime.datetime.now().ctime()

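# build each [TQ, total] pair separately, so that the variables do not share one list object
def unitTQ(nVar): return [ 'unit', [ [[], 0] for _ in range(nVar) ] ]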

# unit = ['unit',[[[],0],[[],0]]]

infinity = float('inf')

# computes weighted squared Euclidean dissimilarity between TQs
def distTQ(X,T,nVar,alpha):
    d = 0
    for i in range(nVar):
        nX = X[i][1]; nT = T[i][1]
        # a leader with zero total cannot attract any unit
        if nT<=0: return infinity
        # difference of the two TQs, each scaled by 1/max(1,total)
        s = TQ.sum(TQ.prodConst(X[i][0],1/max(1,nX)),
                   TQ.prodConst(T[i][0],-1/max(1,nT)))
        # accumulate the weighted contribution of variable i
        d = d + alpha[i]*nX*TQ.total(TQ.prod(s,s))
    # if is.na(d): return(infinity)
    return d
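To make the dissimilarity concrete, here is a minimal sketch that does not use the TQ library. It assumes each profile is a plain dict `{time: value}` over unit-length intervals, so that the total of a product reduces to an ordinary sum; the name `dist_dense` and this representation are illustrative assumptions, not part of the original code.

```python
def dist_dense(x, n_x, t, n_t, alpha=1.0):
    """Weighted squared Euclidean dissimilarity between two profiles.

    x, t     : dicts {time: value} (assumed stand-in for a TQ)
    n_x, n_t : totals used to scale each profile, as in distTQ
    """
    if n_t <= 0:                      # empty leader: infinitely far away
        return float("inf")
    sx = 1 / max(1, n_x)
    st = 1 / max(1, n_t)
    times = set(x) | set(t)           # union of the time points of both profiles
    sq = sum((x.get(k, 0) * sx - t.get(k, 0) * st) ** 2 for k in times)
    return alpha * n_x * sq

# unit with total 4 against a leader with total 4
print(dist_dense({1: 2, 2: 2}, 4, {1: 3, 2: 1}, 4))   # 0.5
```

The scaled profiles are (0.5, 0.5) and (0.75, 0.25), so the squared differences sum to 0.125, which is then multiplied by the unit's total 4.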

# adapted leaders method

#---------------------------------------------

# VB, 16 July 2010

# add a constraint on the minimum number of units in a cluster; bounded radius

# VB, 1. February 2013: added: time, initial clustering

# Python version: VB, 26. June 2020

#

def leaderTQ(TQs,maxL,nVar,alpha,clust=None,trace=2,tim=1,empty=0):
    # uses the global template `unit` (see unitTQ) as the empty leader
    numTQ = len(TQs)
    L = [ deepcopy(unit) for i in range(maxL)]
    Ro = np.zeros(maxL); K = np.zeros(maxL,dtype=int)
    # if not given, random partition into maxL clusters
    if clust is None:
        clust = np.random.choice(np.array(range(1,maxL-empty+1)),size=numTQ)
    else:
        clust = np.array(clust)
    step = 0; print("LeaderTQ:",timer(),"\n\n")
    while tim > 0:
        step = step+1; K.fill(0)
        # new leaders - determine the leaders of clusters in current partition
        # (reset first, so that sums from earlier steps are not carried over)
        for j in range(maxL): L[j] = deepcopy(unit); L[j][0] = "L"+str(j+1)
        for i in range(numTQ):
            j = clust[i]-1
            for k in range(nVar):
                L[j][1][k][0] = TQ.sum(L[j][1][k][0],TQs[i][1][k][0])
                L[j][1][k][1] = L[j][1][k][1]+TQs[i][1][k][1]
        # new partition - assign each unit to the nearest new leader
        clust.fill(0)
        R = np.zeros(maxL); p = np.zeros(maxL); d = np.zeros(maxL)
        for i in range(numTQ):
            for k in range(maxL): d[k] = distTQ(TQs[i][1],L[k][1],nVar,alpha)
            j = np.argmin(d); r = d[j]
            if r == infinity:
                print("Infinite unit=",i,"\n"); print(TQs[i])
            clust[i] = j+1; p[j] = p[j] + r
            if R[j]<r: R[j] = r; K[j] = i
        # report
        if trace>0: print("Step",step,timer())
        delta = [ R[i]-Ro[i] for i in range(maxL) ]
        if trace>1:
            print(table(clust)); print(R); print(delta); print(p)
        if trace>0: print("P =",sum(p))
        # stop when the cluster radii no longer change
        if sum([abs(a) for a in delta])<0.0000001: break
        Ro = R; tim = tim-1
        if tim<1:
            print(table(clust)); print(R); print(delta); print(p); print("P =",sum(p))
            tim = int(input("Times repeat = :\n")); print(f'You entered {tim}')
    # TO DO: in the case of empty clusters use the most distant TQs as seeds
    return { 'proc':"leaderTQ", 'clust':clust.tolist(), 'leaders':L,
             'R':R.tolist(), 'p':p.tolist() }
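The two alternating steps (recompute leaders, reassign units) can be seen in isolation in the following sketch. It is a simplified illustration under stated assumptions, not the clusTQ implementation: units are plain numeric lists, the dissimilarity is the unweighted squared Euclidean distance, clusters are numbered from 0, and the initial partition is a deterministic round-robin instead of a random one. The name `leaders_sketch` is hypothetical.

```python
def leaders_sketch(units, k, clust=None, max_steps=50):
    """Alternate 'new leaders' and 'new partition' until the partition is stable."""
    n, m = len(units), len(units[0])
    if clust is None:
        clust = [i % k for i in range(n)]          # round-robin start (assumption)
    leaders, P = [], 0.0
    for _ in range(max_steps):
        # new leaders: the mean of the units currently in each cluster
        sums = [[0.0] * m for _ in range(k)]
        size = [0] * k
        for u, c in zip(units, clust):
            size[c] += 1
            for j in range(m):
                sums[c][j] += u[j]
        leaders = [[v / size[c] for v in sums[c]] if size[c] else None
                   for c in range(k)]
        # new partition: assign each unit to the nearest non-empty leader
        new, P = [], 0.0
        for u in units:
            best, best_d = None, float("inf")
            for c in range(k):
                if leaders[c] is None:
                    continue
                d = sum((a - b) ** 2 for a, b in zip(u, leaders[c]))
                if d < best_d:
                    best, best_d = c, d
            new.append(best)
            P += best_d                             # total error of the partition
        if new == clust:                            # nothing moved: converged
            break
        clust = new
    return clust, leaders, P

clust, leaders, P = leaders_sketch([[0, 0], [0, 1], [10, 10], [10, 11]], 2)
print(clust, leaders, P)   # [0, 0, 1, 1] [[0.0, 0.5], [10.0, 10.5]] 1.0
```

As in `leaderTQ`, an empty cluster has no usable leader and therefore never attracts units; the sketch simply skips it.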

G = N.loadNetsJSON("C:/Users/batagelj/work/Python/graph/JSON/terror/terror.json")

G.Info()

nVar = 1; alpha = [1]; unit = unitTQ(nVar)

Ter = [[G._nodes[u][3]['lab'], [[G.TQnetSum(u), TQ.total(G.TQnetSum(u))]]]\

for u in G.nodes() ]

Rez = leaderTQ(Ter,100,nVar,alpha,trace=1,tim=5)

js = open("Terror100.json",'w'); json.dump(Rez, js, indent=1); js.close()

Tot = [ (t[0],t[1][0][1]) for t in Ter ]

js = open("Totals.json",'w'); json.dump(Tot, js, indent=1); js.close()

An iteration takes around 7 minutes. I stopped after 50 iterations.

>>>

= RESTART: C:/Users/batagelj/work/Python/graph/Nets/clusTQ/startLeadersTQ.py =

network: Terror

Terror news

simple= False directed= False org= 1 mode= 1 multirel= False temporal= True

nodes= 13332 links= 243447 arcs= 0 edges= 243447

Tmin= 1 Tmax= 67

>>> Rez = leaderTQ(Ter,100,nVar,alpha,trace=1,tim=5)

LeaderTQ: Fri Jun 26 16:17:11 2020

Step 1 Fri Jun 26 16:24:03 2020 P = 41012.94437438724

Step 2 Fri Jun 26 16:31:00 2020 P = 34816.987910189026

Step 3 Fri Jun 26 16:38:02 2020 P = 32237.7188229329

Step 4 Fri Jun 26 16:45:00 2020 P = 30828.419469529275

Step 5 Fri Jun 26 16:51:42 2020 P = 29892.127425480423

Step 6 Fri Jun 26 16:59:51 2020 P = 29194.59947427378

Step 7 Fri Jun 26 17:07:38 2020 P = 28641.034731738484

Step 8 Fri Jun 26 17:15:05 2020 P = 28192.488239481285

Step 9 Fri Jun 26 17:22:39 2020 P = 27821.84173801013

Step 10 Fri Jun 26 17:30:10 2020 P = 27504.183547832534

Step 11 Fri Jun 26 17:42:01 2020 P = 27228.52518355242

Step 12 Fri Jun 26 17:49:49 2020 P = 26981.33179788163

Step 13 Fri Jun 26 17:57:05 2020 P = 26762.369836208803

Step 14 Fri Jun 26 18:04:19 2020 P = 26568.593619232168

Step 15 Fri Jun 26 18:11:31 2020 P = 26395.62563760305

Step 16 Fri Jun 26 18:28:22 2020 P = 26236.897995694795

Step 17 Fri Jun 26 18:34:46 2020 P = 26088.981052957482

Step 18 Fri Jun 26 18:41:47 2020 P = 25951.57575586071

Step 19 Fri Jun 26 18:48:18 2020 P = 25821.565883472253

Step 20 Fri Jun 26 18:56:15 2020 P = 25700.920951763186

Step 21 Fri Jun 26 19:32:54 2020 P = 25588.025305462783

Step 22 Fri Jun 26 19:40:06 2020 P = 25482.561165467723

Step 23 Fri Jun 26 19:47:15 2020 P = 25383.475342625457

Step 24 Fri Jun 26 19:54:18 2020 P = 25289.74904593362

Step 25 Fri Jun 26 20:01:28 2020 P = 25200.29701984593

Step 26 Fri Jun 26 20:08:52 2020 P = 25115.85704328531

Step 27 Fri Jun 26 20:15:53 2020 P = 25035.978863889348

Step 28 Fri Jun 26 20:21:26 2020 P = 24960.26125292126

Step 29 Fri Jun 26 20:27:12 2020 P = 24888.40536113275

Step 30 Fri Jun 26 20:33:05 2020 P = 24819.5120831468

Step 31 Fri Jun 26 20:48:18 2020 P = 24753.51883880618

Step 32 Fri Jun 26 20:54:15 2020 P = 24690.59524831267

Step 33 Fri Jun 26 21:00:15 2020 P = 24630.632428710665

Step 34 Fri Jun 26 21:06:20 2020 P = 24573.316475718257

Step 35 Fri Jun 26 21:12:16 2020 P = 24518.476655021597

Step 36 Fri Jun 26 21:18:13 2020 P = 24465.91176940239

Step 37 Fri Jun 26 21:24:21 2020 P = 24415.60237886584

Step 38 Fri Jun 26 21:30:14 2020 P = 24367.21115117285

Step 39 Fri Jun 26 21:36:06 2020 P = 24321.04265894216

Step 40 Fri Jun 26 21:41:53 2020 P = 24276.783664228875

Step 41 Fri Jun 26 21:48:15 2020 P = 24233.947850857297

Step 42 Fri Jun 26 21:54:34 2020 P = 24191.81422517343

Step 43 Fri Jun 26 22:00:42 2020 P = 24150.937895330193

Step 44 Fri Jun 26 22:06:38 2020 P = 24111.37840853657

Step 45 Fri Jun 26 22:12:30 2020 P = 24073.299354247145

Step 46 Fri Jun 26 22:18:13 2020 P = 24036.467443988044

Step 47 Fri Jun 26 22:23:53 2020 P = 24000.781097476924

Step 48 Fri Jun 26 22:29:36 2020 P = 23965.342450356835

Step 49 Fri Jun 26 22:35:20 2020 P = 23930.986409803205

Step 50 Fri Jun 26 22:40:58 2020 P = 23897.74102650508

Counter({74: 716, 43: 535, 82: 378, 2: 372, 9: 338, 69: 325, 96: 307, 46: 307, 100: 291, 26: 275, 62: 257,

13: 241, 85: 238, 81: 237, 34: 233, 98: 229, 27: 228, 29: 222, 19: 203, 22: 199, 10: 196, 88: 195, 37: 192,

12: 191, 30: 186, 54: 183, 72: 180, 66: 177, 14: 175, 33: 172, 25: 162, 23: 162, 92: 156, 71: 155, 93: 153,

87: 152, 51: 151, 24: 150, 16: 148, 58: 146, 59: 146, 47: 145, 63: 143, 61: 141, 4: 139, 45: 132, 89: 130,

8: 128, 17: 126, 56: 114, 50: 105, 77: 102, 42: 101, 1: 96, 55: 96, 97: 95, 53: 91, 94: 89, 5: 87, 70: 83,

90: 81, 44: 76, 3: 75, 60: 74, 40: 73, 52: 64, 15: 63, 84: 56, 20: 51, 57: 45, 64: 44, 67: 44, 65: 43,

21: 39, 31: 37, 11: 37, 39: 36, 79: 35, 38: 35, 36: 34, 76: 32, 91: 32, 99: 32, 80: 31, 73: 31, 68: 31,

7: 29, 6: 29, 35: 29, 28: 28, 49: 27, 86: 26, 78: 23, 83: 18, 41: 18, 32: 18, 95: 15, 18: 14, 48: 13, 75: 12})

[ 4.05454376 11.62439994 5.35090521 10.21779738 5.38488086 4.88804169

6.45439318 4.47221373 6.82371194 3.86190616 6.63030857 10.48803867

8.50043207 12.85720235 6.88622577 9.64618704 5.53821957 3.42654986

14.24940428 8.34854049 5.94249298 4.1781549 8.24872225 6.83374884

5.16954444 8.70064723 6.59041566 16.58978487 5.74940007 6.40942257

5.56578619 4.98598796 5.50435068 12.1487182 4.98507279 15.17351806

8.5270313 7.25867952 7.46223888 8.54987284 4.29491669 6.82907384

6.32639067 8.72948019 34.68741951 10.18998296 4.66936744 3.95455431

7.28913629 5.29245958 6.03686358 8.35563046 7.32016579 8.85448954

7.31476439 8.50948135 4.54601622 5.36978028 6.10221564 10.6051472

7.52906457 12.99379712 5.5123456 5.8265611 28.44130374 4.28100739

8.9826142 6.71189291 4.08105717 7.96997566 14.47667431 3.35765732

5.58266745 5.16368754 5.84057527 5.16339998 11.07494086 5.71103674

5.75381237 6.34762824 6.48023627 17.33144425 3.02270416 5.11445702

8.99156091 4.34078924 6.18525871 13.81718416 5.29489383 6.22697094

6.5847424 7.09876027 12.36251002 4.8312286 7.8537402 6.00655082

6.36702895 14.67459373 6.44346647 9.41246575]

[-0.025928334494264682, 0.1144546869871288, -0.0013472481771064082, -0.031927777482763986,

-0.004313016897881106, -0.00699245673647475, -0.0015729675097126972, 0.002791108727578262,

-0.015176309951074884, 0.02612313950531675, -0.005059892097893481, -0.018538779587036203,

-0.04524243224014768, 0.008081271659635902, -0.02865593821978507, 0.03928248087084896,

0.0009489417535606393, -0.0012566040340504792, 0.032870339083677536, -0.006793812450089476,

-0.006364208717405617, 0.0009074757369518238, 0.0256754633622851, 0.008267497990432027,

-0.021718770361842665, -0.0062467886607109335, -0.016722265790322766, -0.013430319719322625,

0.007584756964511996, -0.014342401232742219, 0.00143150338578657, -0.00046478858316501004,

0.010880087870412503, 0.001978338664413215, -0.001197435634706423, -0.07678613834789694,

-0.0005183982789738195, -0.17664520115540938, -0.0033090603093270943, -0.003914506240528581,

-0.0024963667913526777, -0.004867813612547955, -0.003569978857913547, -0.0023515446784792005,

0.09853319878786237, -0.015712379286672018, -0.004952953796402149, -0.01716893317577206,

0.00011531243961737658, 0.01859581043121228, -0.03594316395810537, -0.020929434316045104,

0.029233701526942824, -0.0004918877741264538, 0.014664958394515537, 0.04279175364574073,

-0.01421079569809347, -0.007050769142508351, -0.00024851183018359535, -0.003361476597673274,

-0.01181351026306654, 0.062419526846387186, 0.002740524162542002, -0.0008589121273026024,

-0.18451054251177013, 0.016661863674276134, -0.029763920014746148, -0.010861542748953568,

0.017336790725751783, -0.003030324323872513, 0.02388870113427366, -0.0023898562698021486,

-0.008281013672157833, 0.001204656988305075, -0.0035249214065657952, -0.005028994067608927,

-0.009143598388741125, 0.0001842641666431888, -0.003246097173422058, -0.006093907263841736,

0.028720021825460407, 0.01906446383464555, -0.009965711589375648, 0.0012965051648876624,

0.05214469907609676, -0.0029059085015017416, -0.0002010797496776462, -0.0669201499911729,

-0.006648472563363761, -0.0008849414295104552, -0.007582636995611125, -0.050714795904602816,

0.02322280274769284, 0.009402623811269883, -0.0042092851501269735, -0.03256114232137097,

-0.03782291329565357, 0.011091031786282457, -0.007262363740834665, 0.05582725861848381]

[138.28608428 423.02266993 233.55764966 320.07686232 213.95084233

78.82766239 91.04522391 145.43240218 377.9023316 209.89528724

118.97389699 435.4533143 491.59888304 357.89294698 188.47771676

191.65846628 229.64649407 34.00702841 512.27160155 139.4013752

94.61029863 223.29604307 275.04798873 201.44927137 275.12977501

512.9267657 435.17907035 98.28680013 246.06871613 290.74591877

98.72285522 48.19460558 175.27298408 688.2778248 80.3979748

104.76666333 594.72455405 86.36484467 113.81693121 191.61051743

45.56410756 259.00567566 619.47869708 207.99498474 316.21555459

543.28895417 178.53457517 32.91495765 79.91451842 248.03106489

215.47006128 206.0436381 198.8648244 419.11799502 256.74663038

237.16272076 130.52070509 306.52774164 249.64976942 256.20193799

208.88057602 594.4279203 206.29752855 134.87142488 147.09432065

202.68372227 131.36981843 86.33949165 288.52857689 295.41117951

382.58354633 189.09040856 93.62595345 507.10461038 39.7513201

85.34958462 299.1605274 70.2400814 107.83892673 81.99345351

308.70222024 665.46987893 37.33853759 153.12982178 238.10338717

64.73530928 185.93473351 421.76347936 288.03187434 204.24181085

86.2254489 226.43184761 272.55139341 166.03266799 42.05405584

488.25385889 149.63468163 420.37872852 94.72800245 457.83806006]

P = 23897.74102650508

Times repeat = :

0

You entered 0

>>>

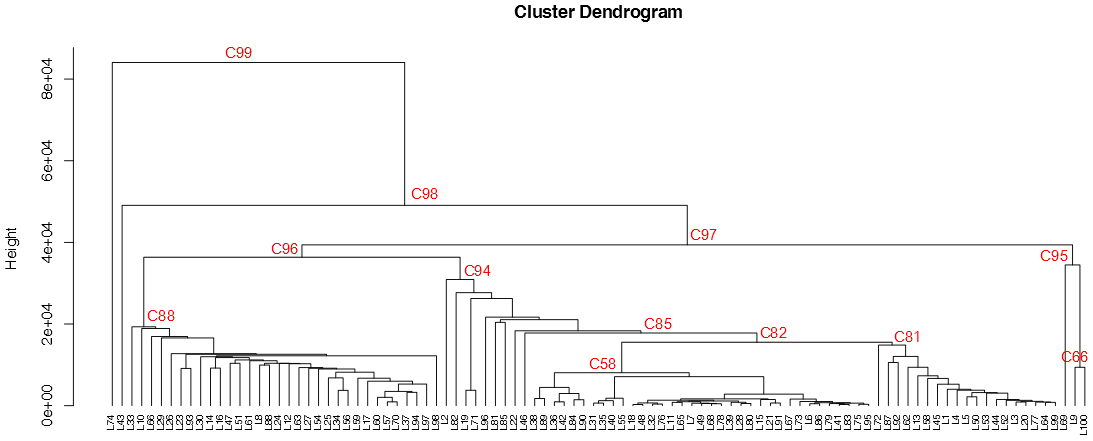

Hierarchical clustering of leaders

Hierarchical clustering of TQs

HC = hclusTQ(Rez['leaders'],nVar,alpha)

js = open("TerrorHC.json",'w'); json.dump(HC, js, indent=1); js.close()

> js <- "TerrorHC.json"
> R <- fromJSON(js)
> attr(R,"class") <- "hclust"
> plot(R,hang=-1,cex=0.7)

> library(wordcloud)

> js <- "Terror100.json"

> Rez <- fromJSON(js)

> js <- "Totals.json"

> Tot <- fromJSON(js)

> names(R)

[1] "proc" "merge" "height" "order" "labels" "method"

[7] "call" "dist.method" "leaders"

> names(Rez)

[1] "proc" "clust" "leaders" "R" "p"

> unitTQ <- function(unit){

+ total <- unit[[1]][[2]][[1]][[2]]

+ TQ <- unit[[1]][[2]][[1]][[1]]

+ name <- unit[[1]][[1]]

+ TQ[,3] <- TQ[,3]/total

+ return(list(name,TQ))

+ }

> L = R$leaders

> W = Tot[,1]

> F = as.integer(Tot[,2])

> C = Rez$clust

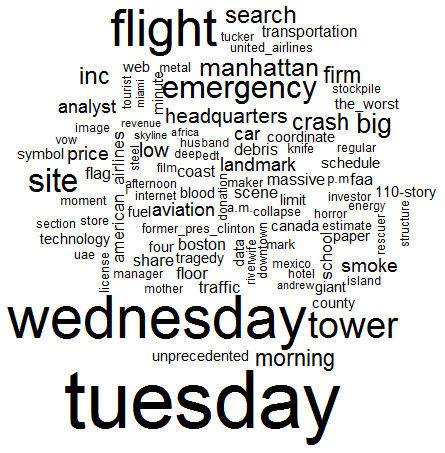

> c95 <- C %in% c(9,69,100)

> sum(c95)

[1] 954
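The R expression `C %in% c(9,69,100)` marks the units whose leader cluster is 9, 69 or 100. A rough Python equivalent is sketched below; the helper name `select_units` and the toy data are hypothetical, while the three cluster sizes are taken from the `Counter` output above.

```python
def select_units(clust, labels, wanted):
    """Return the labels of the units whose cluster number is in `wanted`."""
    wanted = set(wanted)
    return [lab for lab, c in zip(labels, clust) if c in wanted]

# toy data: five units with their leader cluster numbers
clust = [9, 2, 69, 100, 9]
words = ["a", "b", "c", "d", "e"]
print(select_units(clust, words, [9, 69, 100]))   # ['a', 'c', 'd', 'e']

# sizes of clusters 9, 69 and 100 reported by the leaders run
sizes = {9: 338, 69: 325, 100: 291}
print(sum(sizes.values()))                        # 954
```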

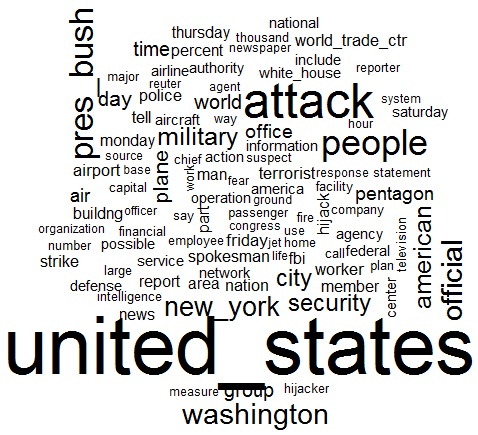

> wordcloud(W[c95],F[c95],scale=c(5,.5),max.words=100)

> L74 <- C %in% c(74)

> sum(L74)

[1] 716

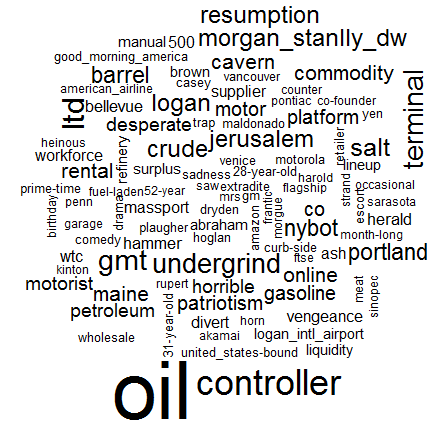

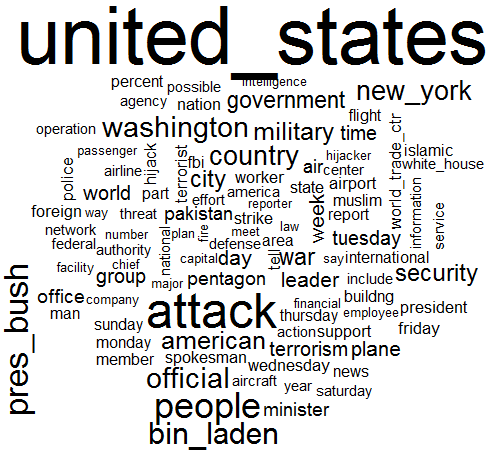

> wordcloud(W[L74],F[L74],scale=c(5,.5),max.words=100)

> L43 <- C %in% c(43)

> sum(L43)

[1] 535

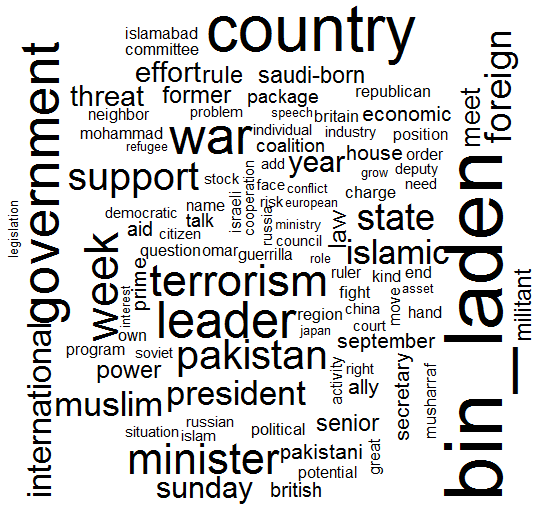

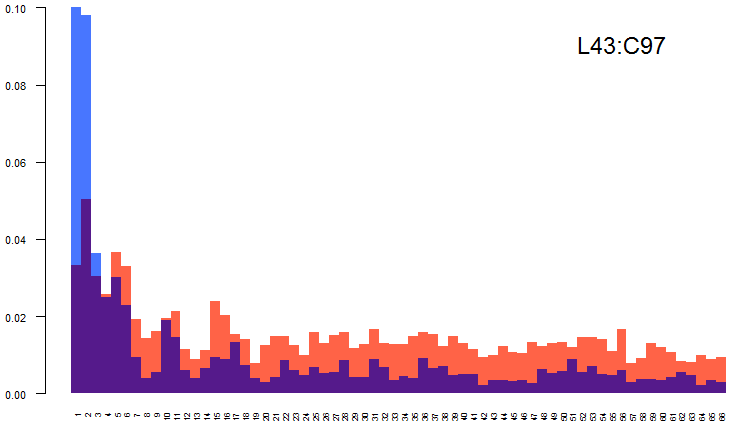

> wordcloud(W[L43],F[L43],scale=c(5,.5),max.words=100)

> L46 <- C %in% c(46)

> sum(L46)

[1] 307

> wordcloud(W[L46],F[L46],scale=c(5,.5),max.words=100)

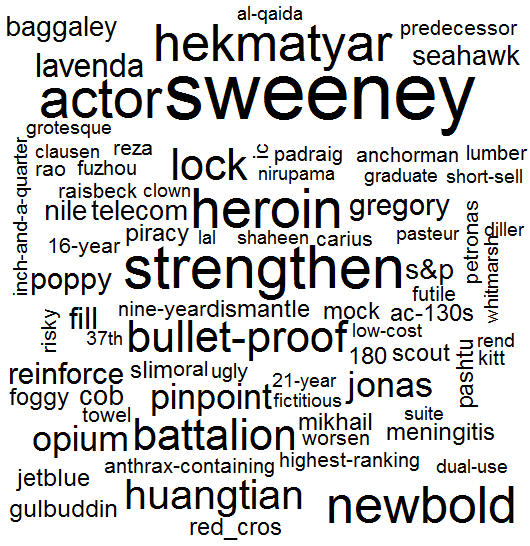

> C58 <- C %in% c(38,89,36,42,84,90,31,35,40,55,18,48,32,76,11,65,7,49,68,78,39,28,80,

15,21,91,67,73,6,86,79,41,83,75,95)

> sum(C58)

[1] 1396

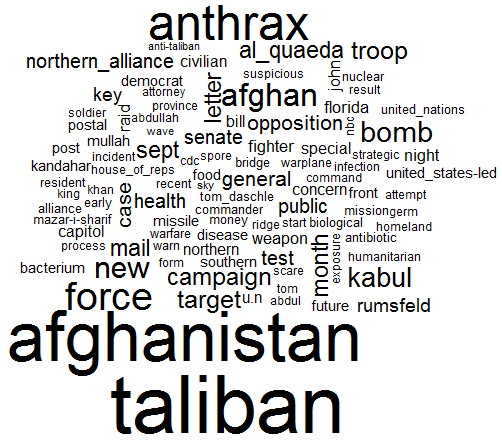

> wordcloud(W[C58],F[C58],scale=c(5,.5),max.words=100)

> C81 <- C %in% c(72,87,92,62,13,58,45,1,4,5,50,53,44,52,3,20,77,64,99)

> sum(C81)

[1] 2226

> wordcloud(W[C81],F[C81],scale=c(5,.5),max.words=100)

> C88 <- C %in% c(33,10,66,29,26,23,93,30,14,16,47,51,61,8,88,24,12,63,27,54,25,34,56,

59,17,60,57,70,37,94,97,98)

> sum(C88)

[1] 5109

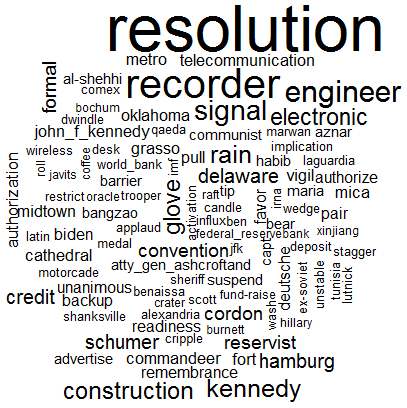

> wordcloud(W[C88],F[C88],scale=c(5,.5),max.words=100)

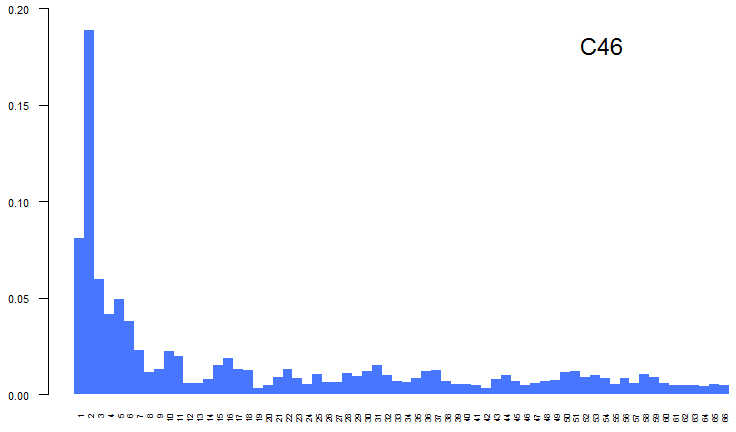

> C46 <- C %in% c(19,71)

> sum(C46)

[1] 358

> wordcloud(W[C46],F[C46],scale=c(5,.5),max.words=100)

Word clouds: WCL74, WCL43, WCL46, WCC46, WCC58, WCC81, WCC88, WCC95, WCC94


> source("https://raw.githubusercontent.com/bavla/Nets/master/source/hist.R")

> LL <- Rez$leaders; CC <- R$leaders

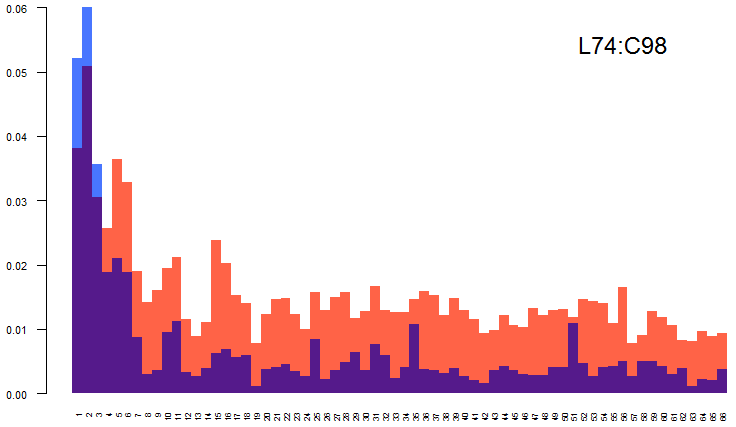

> coHist(unitTQ(LL[74]),unitTQ(CC[98]),1,66,lab="L74:C98",ylim=c(0,0.06),cex.names=0.5,cex.lab=1.5,xlab=50)

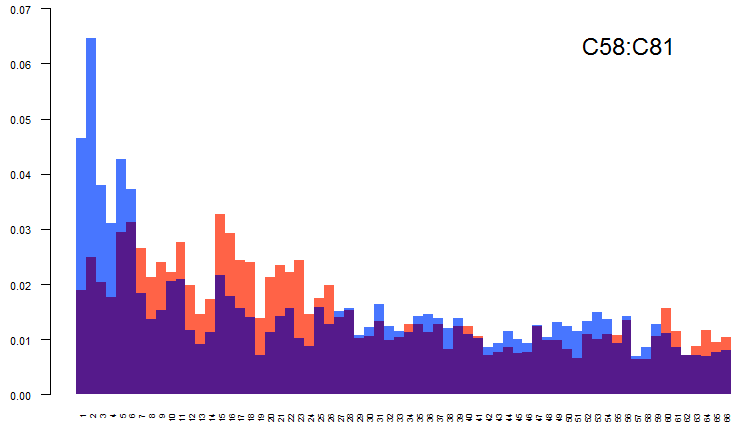

> coHist(unitTQ(CC[58]),unitTQ(CC[81]),1,66,lab="C58:C81",ylim=c(0,0.07),cex.names=0.5,cex.lab=1.5,xlab=50)

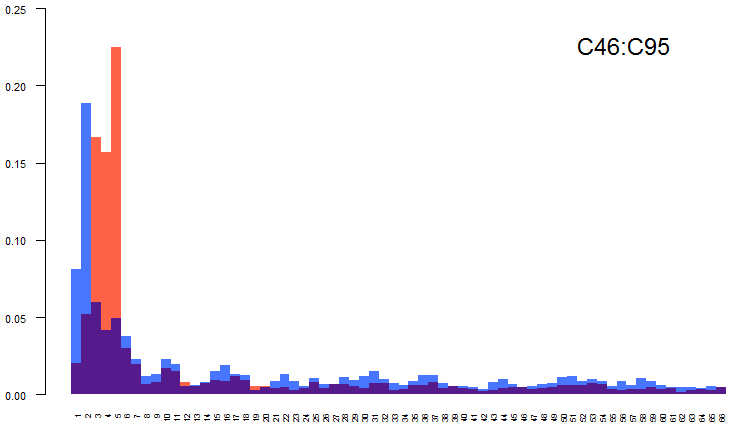

> coHist(unitTQ(CC[46]),unitTQ(CC[95]),1,66,lab="C46:C95",ylim=c(0,0.25),cex.names=0.5,cex.lab=1.5,xlab=50)

> siHist(unitTQ(CC[46]),1,66,TRUE,ylim=c(0,0.20),cex.names=0.5,cex.lab=1.5,col="royalblue1",xlab=50)

> coHist(unitTQ(LL[43]),unitTQ(CC[97]),1,66,lab="L43:C97",ylim=c(0,0.20),cex.names=0.5,cex.lab=1.5,xlab=50)

[1,] 1 2 0.439295134

[2,] 2 3 0.098072566

> unitTQ(CC[95])

[3,] 3 4 0.166539651

[4,] 4 5 0.156973537

[5,] 5 6 0.225025874

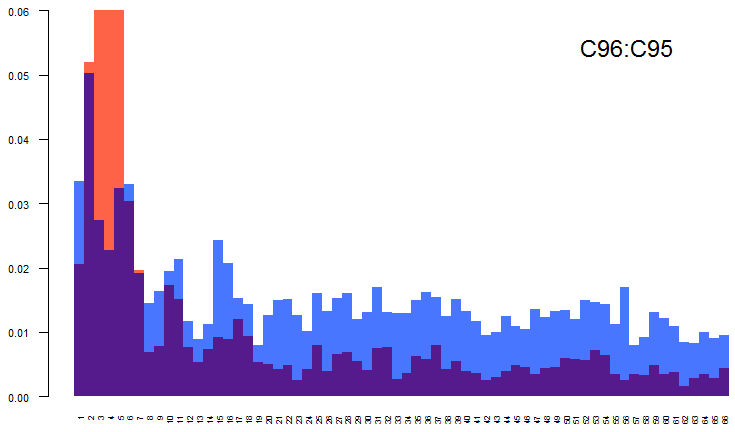

> coHist(unitTQ(CC[96]),unitTQ(CC[95]),1,66,lab="C96:C95",ylim=c(0,0.06),cex.names=0.5,cex.lab=1.5,xlab=50)

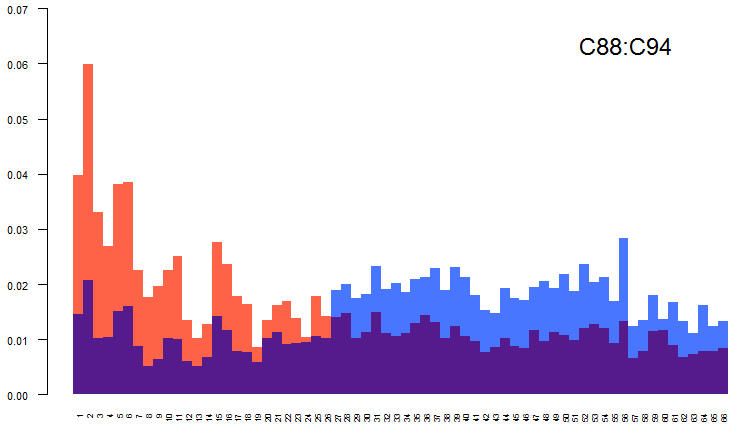

> coHist(unitTQ(CC[88]),unitTQ(CC[94]),1,66,lab="C88:C94",ylim=c(0,0.07),cex.names=0.5,cex.lab=1.5,xlab=50)

> X <- unitTQ(LL[96])

> unitTQ(CC[66])[[2]][1:6,]

[,1] [,2] [,3]

[1,] 1 2 0.022506687

[2,] 2 3 0.050189264

[3,] 3 4 0.039212227

[4,] 4 5 0.196113024

[5,] 5 6 0.291704826

[6,] 6 7 0.034845800

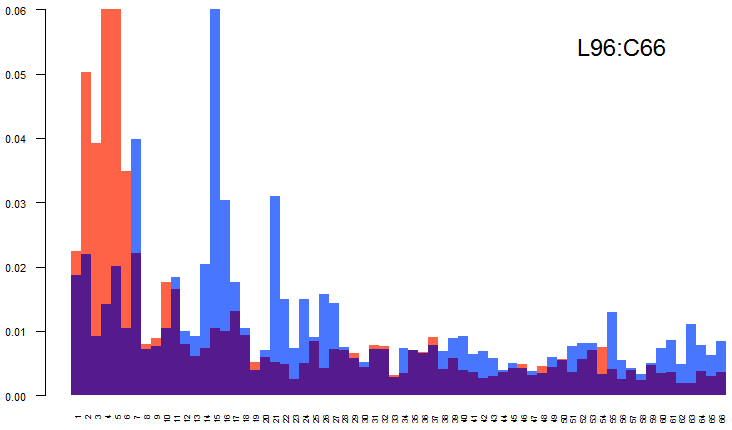

> coHist(unitTQ(LL[96]),unitTQ(CC[66]),1,66,lab="L96:C66",ylim=c(0,0.06),cex.names=0.5,cex.lab=1.5,xlab=50)

L74: [1,] 1 2 0.052054299; [2,] 2 3 0.589369496

L43: [1,] 1 2 0.439295134; [2,] 2 3 0.098072566

C95: [3,] 3 4 0.166539651; [4,] 4 5 0.156973537; [5,] 5 6 0.225025874

L96: [15,] 15 16 0.352360449

C66: [4,] 4 5 0.196113024; [5,] 5 6 0.291704826

Using package clusTQ

I combined the leaders method and the hierarchical clustering method in the package clusTQ.

wdir = "C:/Users/batagelj/work/Python/graph/Nets/clusTQ"

gdir = 'c:/users/batagelj/work/python/graph/Nets'

import sys, os, re, datetime, json

sys.path = [gdir]+sys.path; os.chdir(wdir)

from TQ import *

from Nets import Network as N

import clusTQ as cl

G = N.loadNetsJSON("C:/Users/batagelj/work/Python/graph/JSON/terror/terror.json")

G.Info()

nVar = 1; alpha = [1]

Ter = [[G._nodes[u][3]['lab'], [[G.TQnetSum(u), TQ.total(G.TQnetSum(u))]]]\

for u in G.nodes() ]

# Rez = cl.leaderTQ(Ter,100,nVar,alpha,trace=1,tim=5)

# js = open("Terror100.json",'w'); json.dump(Rez, js, indent=1); js.close()

# Tot = [ (t[0],t[1][0][1]) for t in Ter ]

# js = open("Totals.json",'w'); json.dump(Tot, js, indent=1); js.close()

# HC = cl.hclusTQ(Rez['leaders'],nVar,alpha)

# js = open("TerrorHC.json",'w'); json.dump(HC, js, indent=1); js.close()

Post analysis of saved data

Reading saved data in Python

wdir = "C:/Users/batagelj/work/Python/graph/Nets/clusTQ"

gdir = 'c:/users/batagelj/work/python/graph/Nets'

import sys, os, re, datetime, json

sys.path = [gdir]+sys.path; os.chdir(wdir)

from TQ import *

from Nets import Network as N

from numpy import random

import numpy as np

from copy import copy, deepcopy

import collections

def table(arr): return collections.Counter(arr)

with open("TerrorHC.json") as json_file: R = json.load(json_file)

with open("Terror100.json") as json_file: Rez = json.load(json_file)

with open("Totals.json") as json_file: Tot = json.load(json_file)

R.keys()

# dict_keys(['proc', 'merge', 'height', 'order', 'labels', 'method', 'call', 'dist.method', 'leaders'])

Rez.keys()

# dict_keys(['proc', 'clust', 'leaders', 'R', 'p'])

G = N.loadNetsJSON("C:/Users/batagelj/work/Python/graph/JSON/terror/terror.json")

G.Info()

Reading saved data in R

and creating a cut partition into 5 clusters from the hierarchy

wdir <- "C:/Users/batagelj/work/Python/graph/Nets/clusTQ"
library(rjson)
setwd(wdir)
js <- "TerrorHC.json"; R <- fromJSON(file=js); attr(R,"class") <- "hclust"
js <- "Terror100.json"; Rez <- fromJSON(file=js)
js <- "Totals.json"; Tot <- fromJSON(file=js)
R$merge <- matrix(unlist(R$merge),nrow=99,ncol=2,byrow=TRUE)
p <- cutree(R,k=5)
as.vector(p)
 [1] 1 1 1 1 1 1 1 2 3 2 1 2 1 2 1 2 2 1 1 1 1 1 2 2 2 2 2 1 2 2 1 1 2 2 1 1 2 1 1 1
[41] 1 1 4 1 1 1 2 1 1 1 2 1 1 2 1 2 2 1 2 2 2 1 2 1 1 2 1 1 3 2 1 1 1 5 1 1 1 1 1 1
[81] 1 1 1 1 1 1 1 2 1 1 1 1 2 2 1 1 2 2 1 3
table(Rez$clust)
   1   2   3   4   5   6   7   8   9  10  11  12  13  14  15  16  17  18  19  20  21
  96 372  75 139  87  29  29 128 338 196  37 191 241 175  63 148 126  14 203  51  39
  22  23  24  25  26  27  28  29  30  31  32  33  34  35  36  37  38  39  40  41  42
 199 162 150 162 275 228  28 222 186  37  18 172 233  29  34 192  35  36  73  18 101
  43  44  45  46  47  48  49  50  51  52  53  54  55  56  57  58  59  60  61  62  63
 535  76 132 307 145  13  27 105 151  64  91 183  96 114  45 146 146  74 141 257 143
  64  65  66  67  68  69  70  71  72  73  74  75  76  77  78  79  80  81  82  83  84
  44  43 177  44  31 325  83 155 180  31 716  12  32 102  23  35  31 237 378  18  56
  85  86  87  88  89  90  91  92  93  94  95  96  97  98  99 100
 238  26 152 195 130  81  32 156 153  89  15 307  95 229  32 291
q <- p[Rez$clust]
table(q)
   1    2    3    4    5
6018 5109  954  535  716
c94 <- as.vector((1:100)[p==1])
C94 <- C %in% c94
wordcloud(W[C94],F[C94],scale=c(5,.5),max.words=100)
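What `cutree(R,k=5)` does with the `merge` table can be sketched in plain Python. The sketch assumes the R `hclust` convention: in each row a negative entry `-i` denotes the single unit `i`, and a positive entry `j` denotes the cluster formed in row `j`. Clusters are numbered in order of first appearance among the units, as `cutree` does. The function name `cut_tree` is hypothetical and this is an illustration, not the code used above.

```python
def cut_tree(merge, k):
    """Cut an R-style hclust merge table into k clusters (labels 1..k)."""
    n = len(merge) + 1                        # number of units
    parent = list(range(n + len(merge)))      # units 0..n-1, then merged nodes

    def find(x):
        # root of the tree that currently contains x (with path halving)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def node(a):
        # translate an R merge entry into a node index
        return -a - 1 if a < 0 else n + a - 1

    # applying the first n-k merges leaves exactly k clusters
    for step in range(n - k):
        a, b = merge[step]
        new = n + step
        parent[find(node(a))] = new
        parent[find(node(b))] = new

    labels, out = {}, []
    for unit in range(n):
        root = find(unit)
        if root not in labels:
            labels[root] = len(labels) + 1
        out.append(labels[root])
    return out

# four units: (1,2) and (3,4) are merged first, then the two pairs
merge = [[-1, -2], [-3, -4], [1, 2]]
print(cut_tree(merge, 2))   # [1, 1, 2, 2]
```

With the 99-row `merge` table of the 100 leaders, `k=5` would apply the first 95 merges.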

I manually converted the partition p of the 100 leaders into a Python assignment and computed the corresponding partition C of the units:

>>> p5 = [ 1, 1, 1, 1, 1, 1, 1, 2, 3, 2, 1, 2, 1, 2, 1, 2, 2, 1, 1, 1,

1, 1, 2, 2, 2, 2, 2, 1, 2, 2, 1, 1, 2, 2, 1, 1, 2, 1, 1, 1,

1, 1, 4, 1, 1, 1, 2, 1, 1, 1, 2, 1, 1, 2, 1, 2, 2, 1, 2, 2,

2, 1, 2, 1, 1, 2, 1, 1, 3, 2, 1, 1, 1, 5, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 1, 2, 2, 1, 1, 2, 2, 1, 3 ]

>>> q = [ p5[i-1] for i in Rez['clust'] ]

>>> table(q)

Counter({1: 6018, 2: 5109, 3: 954, 5: 716, 4: 535})

>>> C = [[] for r in range(5)]

>>> for i in range(1,6): C[i-1] = [ v for v in G.nodes() if q[v-1]==i ]

>>> Nam = [ 'C94', 'C88', 'C95', 'L43', 'L74' ]

>>> Num = [ len(C[i]) for i in range(5) ]

>>> Num

[6018, 5109, 954, 535, 716]
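The step `q = [ p5[i-1] for i in Rez['clust'] ]` composes two partitions: each unit is mapped to its leader, and each leader to its cluster in the hierarchy. A toy version, with the hypothetical helper name `compose` and made-up data:

```python
from collections import Counter

def compose(leader_cluster, unit_leader):
    """leader_cluster[j-1] is the cluster of leader j (leaders are numbered from 1);
    unit_leader[i] is the leader assigned to unit i."""
    return [leader_cluster[j - 1] for j in unit_leader]

leader_cluster = [1, 1, 2]        # three leaders cut into two clusters
unit_leader = [3, 1, 2, 3]        # four units and their leaders
q = compose(leader_cluster, unit_leader)
print(q)                          # [2, 1, 1, 2]
print(Counter(q))                 # cluster sizes in terms of units
```

The same bookkeeping explains the sizes reported above: cluster 3 consists of leaders 9, 69 and 100, whose sizes 338, 325 and 291 add up to 954.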

>>> M = [[[] for r in range(5)] for s in range(5)]

>>> T = [[0 for r in range(5)] for s in range(5)]

>>> for r in range(5):
        for s in range(r,5):
            M[r][s] = G.TQactivity(C[r],C[s])

>>> for r in range(5): M[r][r] = TQ.prodConst(M[r][r],1/2)

>>> for r in range(5):
        for s in range(r,5):
            T[r][s] = TQ.total(M[r][s])

>>> T

[[143549, 67801, 5422, 2816, 2939],

[ 0, 18288, 739, 357, 357],

[ 0, 0, 535, 53, 54],

[ 0, 0, 0, 205, 51],

[ 0, 0, 0, 0, 281]]

>>> Q = [[0 for r in range(5)] for s in range(5)]

>>> for r in range(5):
        for s in range(r,5):
            Q[r][s] = T[r][s]/sqrt(len(C[r])*len(C[s]))

>>> Q

[[23.85, 12.23, 2.26, 1.57, 1.42],

[ 0, 3.58, 0.33, 0.22, 0.19],

[ 0, 0, 0.56, 0.07, 0.07],

[ 0, 0, 0, 0.38, 0.08],

[ 0, 0, 0, 0, 0.39]]
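The normalization divides each block total by the geometric mean of the two cluster sizes, Q[r][s] = T[r][s]/sqrt(n_r n_s). The sketch below recomputes Q from the values of `T` and `Num` printed above, using only the standard library; rounding to two decimals is an assumption made to match the displayed matrix.

```python
from math import sqrt

# block totals and cluster sizes copied from the session above
T = [[143549, 67801, 5422, 2816, 2939],
     [0, 18288, 739, 357, 357],
     [0, 0, 535, 53, 54],
     [0, 0, 0, 205, 51],
     [0, 0, 0, 0, 281]]
Num = [6018, 5109, 954, 535, 716]

# upper triangle only, as in the original loops
Q = [[round(T[r][s] / sqrt(Num[r] * Num[s]), 2) if s >= r else 0
      for s in range(5)] for r in range(5)]

for row in Q:
    print(row)
```

On the diagonal the denominator is simply the cluster size, for example 143549/6018 ≈ 23.85 for the first block.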

Saving BM in JSON

>>> BM = []

>>> for r in range(5):
        for s in range(r,5):
            BM.append([ Nam[r]+'-'+Nam[s], [[M[r][s], TQ.total(M[r][s])]]])

>>> js = open("BM.json",'w'); json.dump(BM, js, indent=1); js.close()

Drawing BM TQs in R

> unitTQ <- function(unit){

+ total <- unit[[1]][[2]][[1]][[2]]

+ TQ <- matrix(unlist(unit[[1]][[2]][[1]][[1]]),ncol=3,byrow=TRUE)

+ name <- unit[[1]][[1]]

+ TQ[,3] <- TQ[,3]/total

+ return(list(name,TQ))

+ }

> js <- "BM.json"; BM <- fromJSON(file=js)

> for(b in 1:15){

+ siHist(unitTQ(BM[b]),1,66,TRUE,ylim=c(0,0.07),cex.names=0.5,cex.lab=1.5,col="royalblue1",xlab=50,PDF=TRUE)

+ # ans <- readline(prompt=paste(str(b),". Press enter to continue"))

+ }
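The R helper `unitTQ` rescales the third column of a TQ by its total, so that profiles of very different size can be drawn on the same axis. A Python counterpart might look as follows; it assumes the TQ is a list of `[start, end, value]` triples with the total supplied separately, as stored in `BM.json`, and the name `normalize_tq` is hypothetical.

```python
def normalize_tq(triples, total):
    """Divide the value of every [start, end, value] triple by `total`."""
    return [[s, f, v / total] for s, f, v in triples]

print(normalize_tq([[1, 2, 2], [2, 3, 6]], 8))   # [[1, 2, 0.25], [2, 3, 0.75]]
```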